In August 2021, the number of journals included in the Cabells Predatory Reports database reached 15,000, and we declared at the time that it represented a ‘mountain to climb’ for many researchers, as other integrity issues were proliferating. Sadly, this mountain has only grown, with the number of predatory journals listed in Predatory Reports now passing 20,000 (20,274 to be precise).

While the rate of growth has slowed a little, AI technology is now boosting academic outputs – helping some scientists do more research, yet also helping others to write more papers. Undoubtedly, this is helping to drive up submissions to all journals, including deceptive ones. Predatory journals offer an easy solution to unethical authors seeking publication, and it’s even easier if AI has written the article for them.

Fast Ascent

Cabells first started work on a database of predatory journals in 2015. At the time, there was much interest in Jeffrey Beall’s lists of predatory journals and publishers, building on the work he had done researching the phenomenon and highlighting the problems it was causing researchers, universities, and funders. However, concerns were also being raised about the subjective nature of the lists, which often identified journals and publishers with unusual or poorly communicated Open Access policies.

When Beall sunsetted his lists in early 2017, Cabells had already completed much of its development for what became known as Predatory Reports, which launched later that year with around 7,000 journals. This grew to 10,000 by 2018, 15,000 by 2021, and now 20,000 in 2026. While the rate of increase has slowed a little in recent years, this is in part due to changes we have made on the back end to improve workflows. Additionally, we have seen some increases in the number of articles appearing in predatory journals, which may be a result of the increased ease with which articles can be created using AI.

Easy Path

This path of least resistance appears to be fueling the growth of articles published unethically, either in predatory journals or in legitimate journals after passing unnoticed through peer review. How can this current trajectory be checked? Several research and publishing integrity solutions have come onto the market, ranging from tools that try to spot the use of AI in papers to beefed-up protocols for managing it.

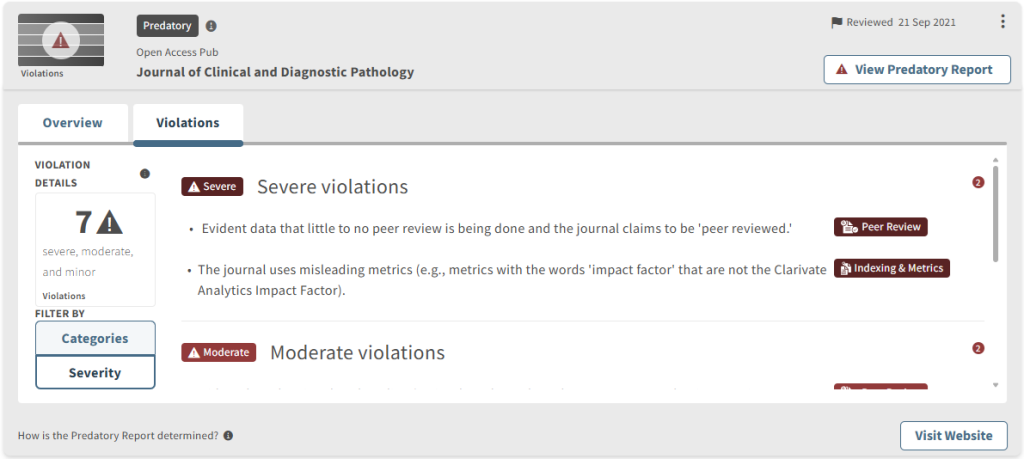

For Cabells’ part, we have been working to improve our capabilities in detecting and reporting on predatory journal activity, including upgrading the criteria we use for identifying them. We enhanced nearly half of the criteria, which will enable us to more easily assess predatory activity in the context of improved technologies. We are also working on ways to expand our overall assessment of journals to provide more nuanced detail, as well as integrate with researchers and management systems. More than a decade after its initial development, in many ways, the climb is only just starting.