Generative Artificial Intelligence (AI) platforms, such as ChatGPT, have recently undergone a meteoric rise in sophistication, accessibility, and prevalence across nearly every industry. Scholarly publishing and communications are no exception: it seems that every LinkedIn feed and conference program is teeming with discussions of these platforms. Depending on whom you ask, generative AI is either the scholarly publishing professional’s new best friend or their greatest threat. Here, we aim to provide an unbiased, factual guide to what generative AI is, its current implications for our industry, and what you need to know to adapt to this rapidly evolving paradigm shift.

What Are Generative AI and ChatGPT?

Let’s start with the basics. Artificial intelligence, or AI, is a widely used but poorly understood term. AI generally describes a subfield of computer science focused on simulating human-like intelligence and problem solving through complex algorithms capable of processing massive amounts of data. ‘Machine learning’ and ‘deep learning’ are two commonly used terms for methods by which computer systems learn patterns from extremely large datasets, or ‘big data’, rather than following explicitly programmed rules; deep learning is a subset of machine learning that uses many-layered artificial neural networks. ‘Generative AI’ refers to AI systems that use these learned patterns to produce new content, such as text, images, or computer code, in response to a user’s request.

ChatGPT acts as a bridge between humans and AI. It is an ‘AI chatbot’: software that simulates human conversation so that we, the users, can interact with an advanced AI system on almost any imaginable topic. In practice, ChatGPT looks much like a standard messaging app that you would use to talk with another person over the internet, such as Facebook Messenger or the chat window in Zoom.

ChatGPT answers a user’s questions by generating text based on patterns learned from a vast body of training data, much of it drawn from the internet. Asking the exact same question multiple times can produce different responses, though their content is generally consistent. ChatGPT isn’t the only AI chatbot currently available, but it is arguably the most widely used and recognizable.

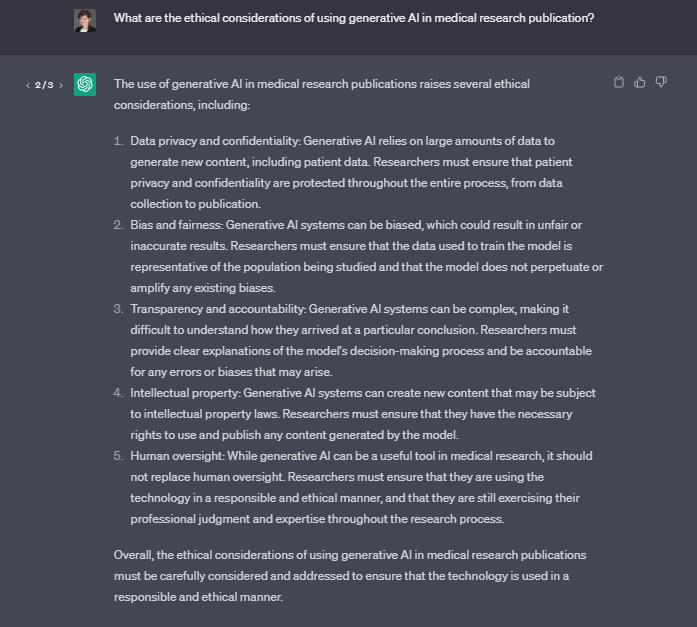

Seeing the chatbot in action can go a long way in understanding its utility and risks. Click here to see three example responses generated by ChatGPT for the question, “What are the ethical considerations of using generative AI in medical research publication?” This hyperlink requires an OpenAI login to access, so screenshots of these responses are also included at the bottom of this article.

Generative AI and the Medical Research Publications Industry

Generative AI has several direct implications for the medical research publications industry, many of which relate to its use in generating manuscript text. Because generative AI is so new, is evolving rapidly, and faces unclear future legal restrictions on its access, some of these implications and projections are inherently speculative; we have therefore focused on the current state of generative AI use in medical publications.

Plagiarism in Manuscript Content Generation

One of the most fervently debated aspects of AI-generated text in medical manuscripts is plagiarism and source citation. Proponents and detractors generally agree that many current AI chatbots do not adequately cite the sources of the information they use to formulate responses, so their output may reproduce or closely paraphrase existing work without attribution.

Main takeaways: Current plagiarism-screening software does not reliably detect potential plagiarism in AI-generated text. AI chatbots appear to be here to stay, and although many efforts are underway to develop software that can detect AI-generated text, it’s unclear how successful these efforts will be.

Listing AI Chatbots as Manuscript Authors

Opinions on the ethics of using AI chatbots to generate manuscripts vary widely. However, now that AI chatbots are widely accessible, it’s inevitable that at least some authors will choose to use them. Industry leaders have reached broad agreement on how such use should be disclosed.

Main takeaways: Currently, publication ethics leaders and most major journal publishers agree that generative AI chatbots should not be listed as authors on research papers, because they cannot take responsibility for the work. Use of these platforms in manuscript preparation, including image creation, must be disclosed, typically in the Methods or Acknowledgments section. Because requirements vary, check with a journal’s editors or publisher for specific disclosure guidelines.

Incorrect/Incomplete Information from ChatGPT

One of the primary limitations and dangers of AI chatbots is that the information they generate may be inaccurate, biased, discriminatory, or inappropriate. Worryingly, AI chatbots are adept at producing text that sounds authoritative and is well written but contains incorrect information or little substantive content. Additionally, unlike search engines such as Google, many AI chatbots are not continuously updated with current information. ChatGPT, for example, has a knowledge cutoff date: its training data include only information published before that date, so its responses will not reflect new discoveries or research published afterward.

Main takeaways: If you or an author plan to use ChatGPT for any phase of research, from idea generation to manuscript drafting, manually review its responses for accuracy. Be sure to identify and include relevant articles published after ChatGPT’s cutoff date. Additionally, recognize that chatbots cannot be held legally accountable for their responses; all responsibility for the content rests with the user.

Natural Language/Plain Language Generation

One notable capability of AI chatbots is translating scholarly content and jargon into plain language. Although this capability could help more members of the public access and understand medical content, it also raises a wide range of concerns, including inaccuracies in the generated content and the loss of crucial nuance in translation.

Main takeaways: Any content generated by an AI chatbot must be critically reviewed for accuracy and thoroughly edited to ensure that patient-facing materials retain all necessary detail and nuance. Be sure to disclose your use of the AI chatbot to the content publisher.